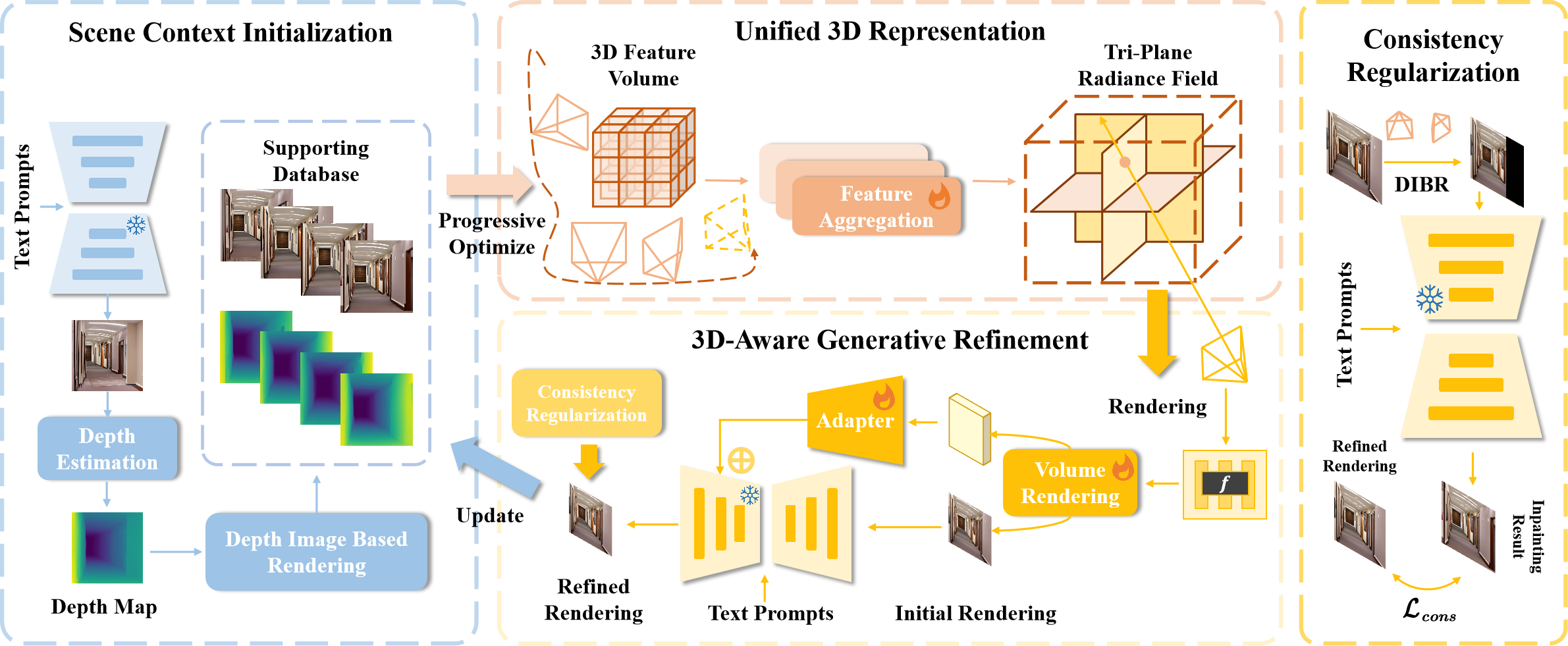

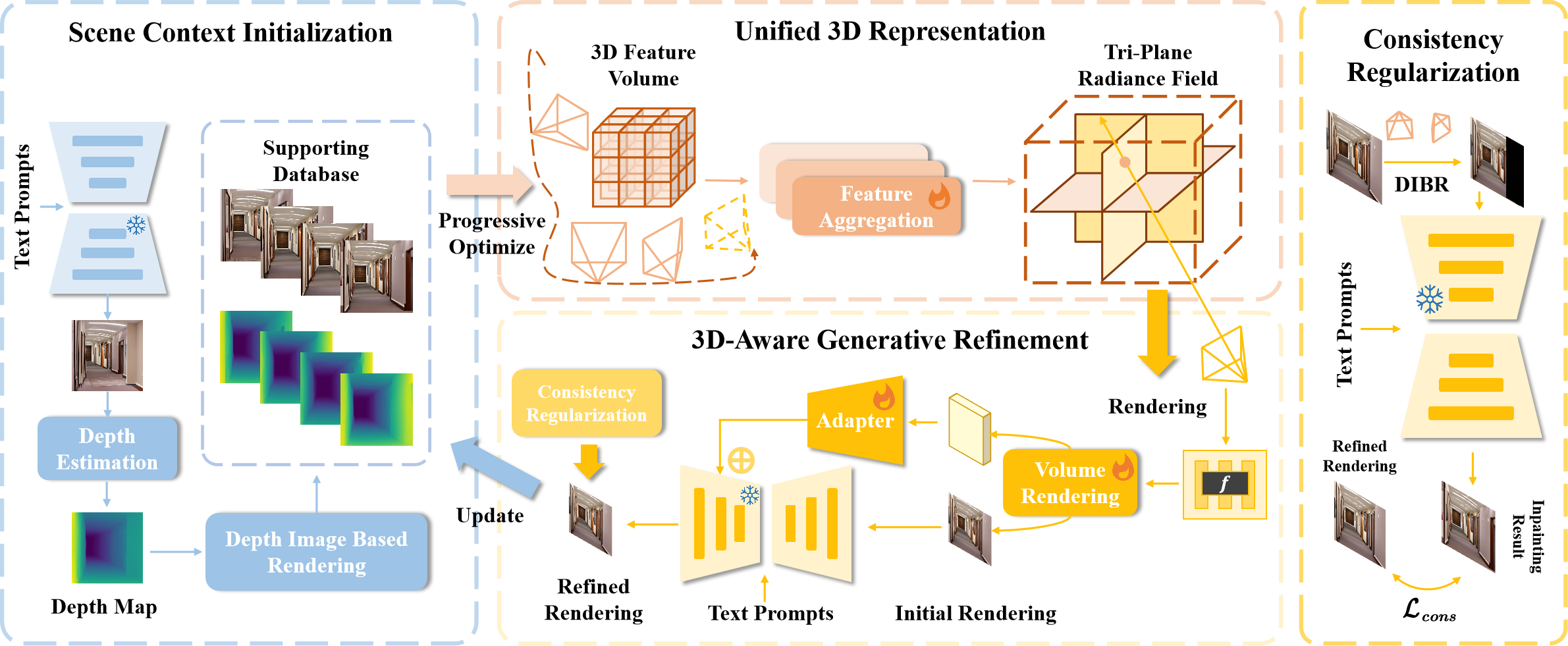

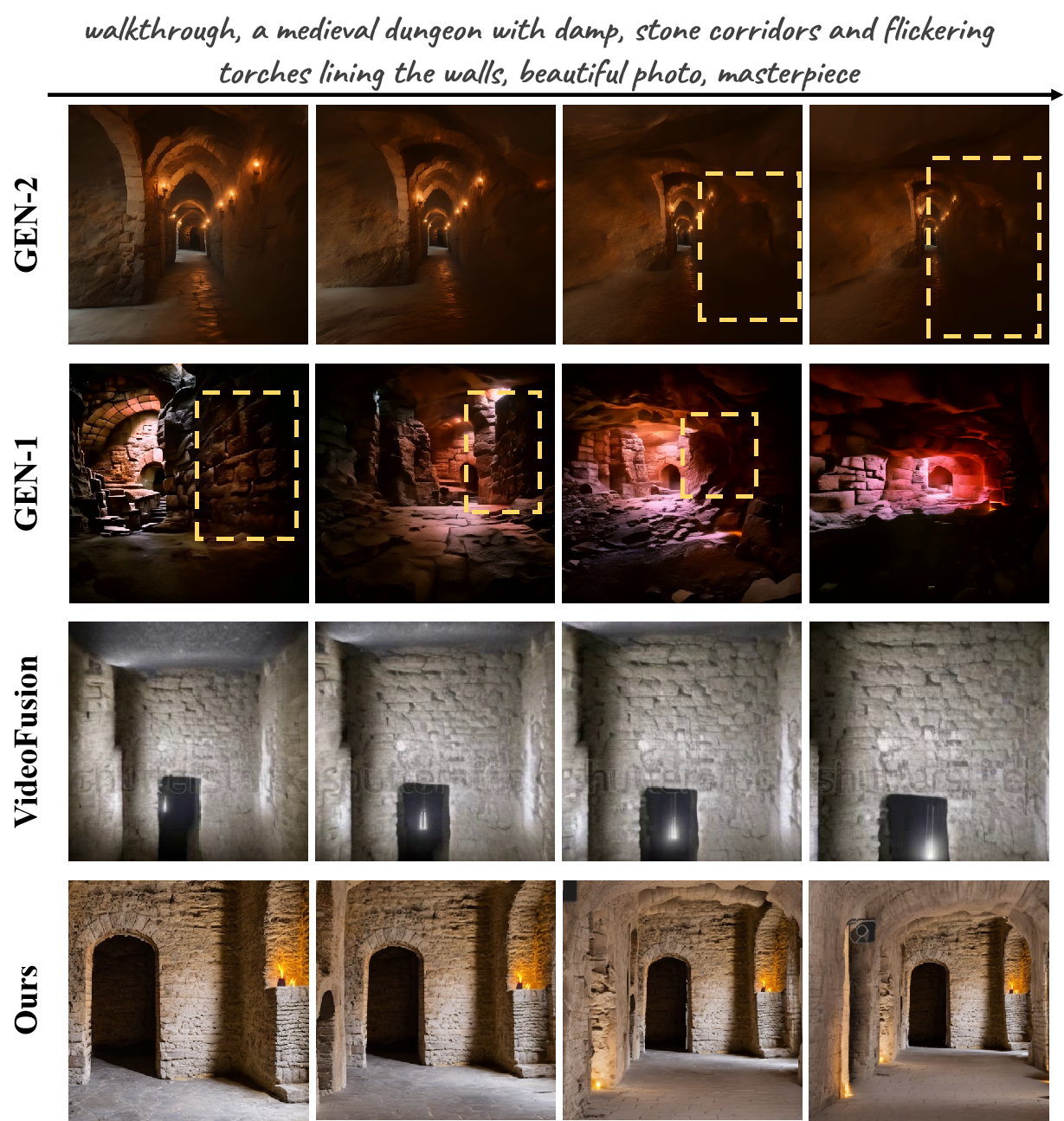

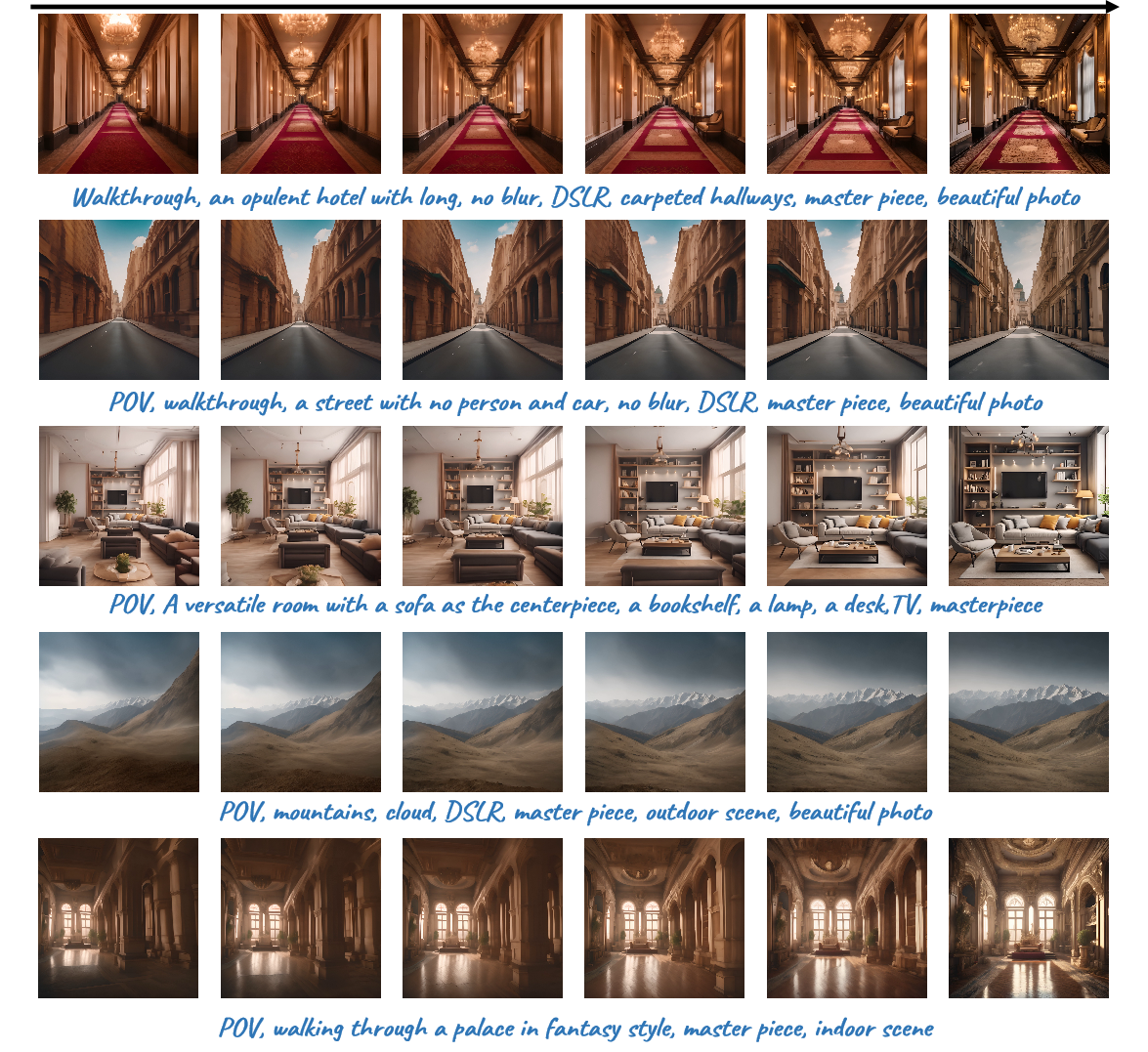

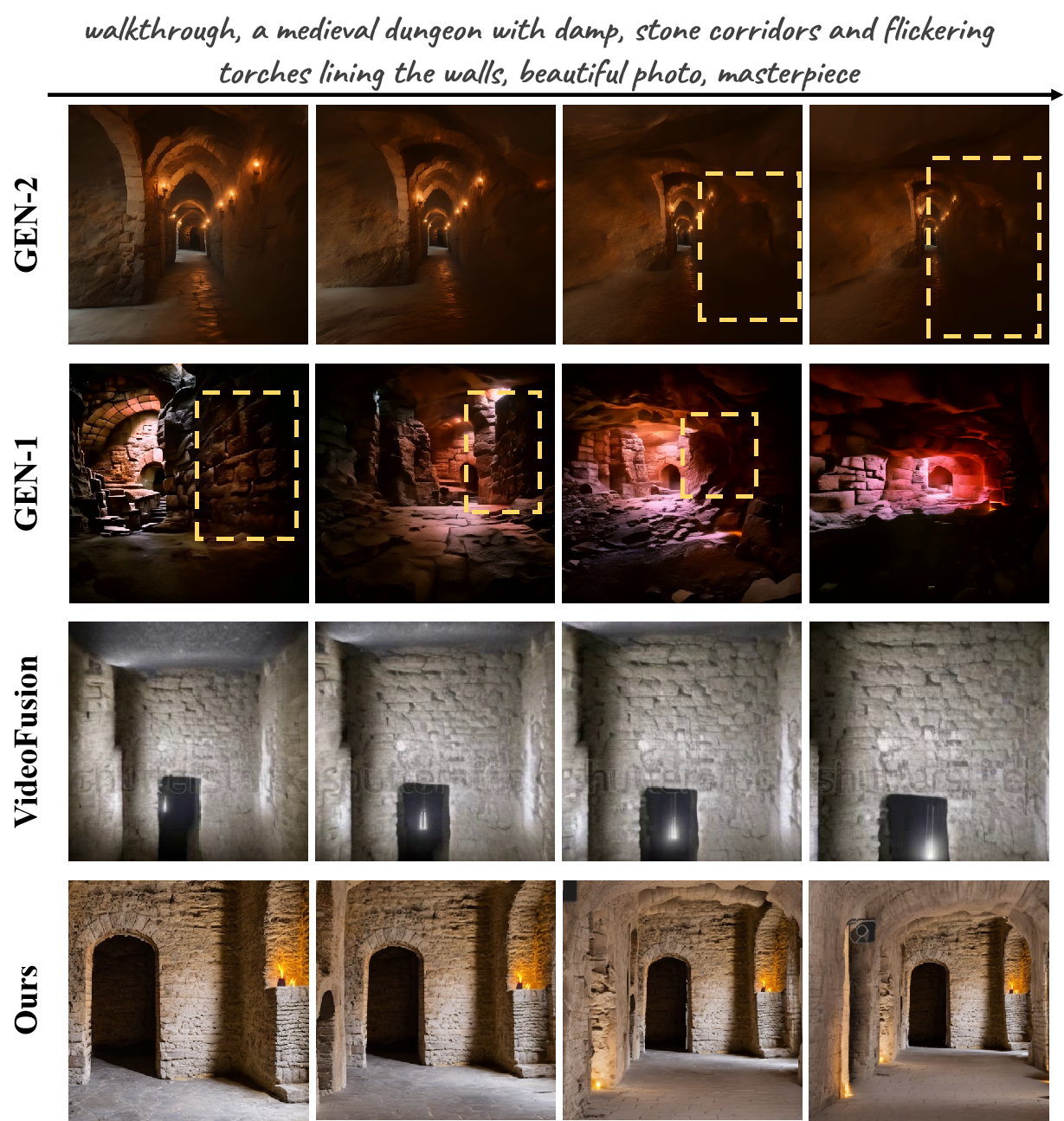

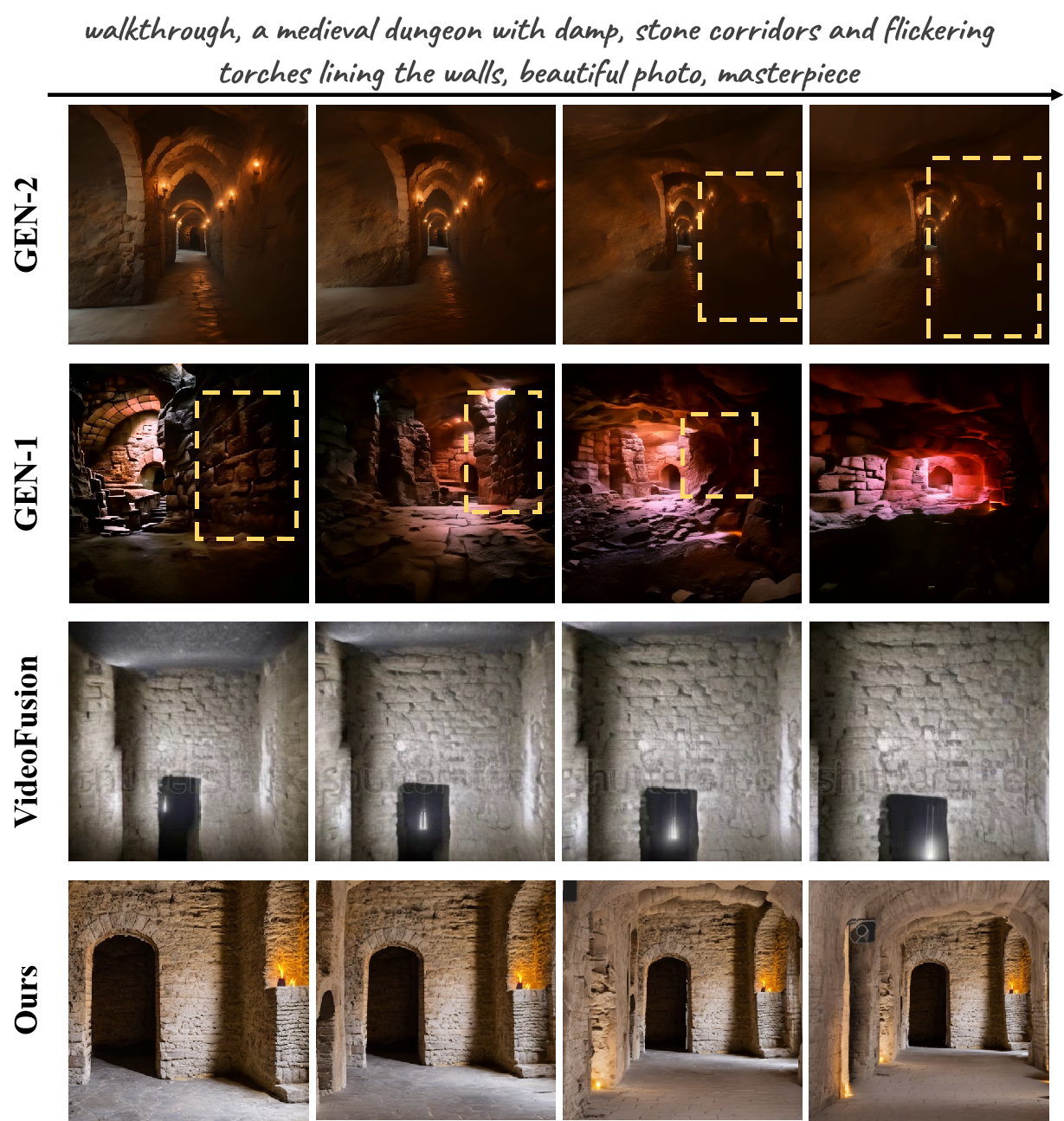

Text-driven 3D scene generation techniques have made rapid progress in recent years, benefiting from the development of diffusion models. Their success is mainly attributed to using existing diffusion models to iteratively perform image warping and inpainting to generate 3D scenes. However, these methods heavily rely on the outputs of existing models, leading to an error accumulation in geometry and appearance that prevent the models from being used in various scenarios (e.g., outdoor and unreal scenarios). To address this limitation, we generatively refine the newly generated local views by querying and aggregating global 3D information, and then progressively generate the 3D scene. To this end, we first employ a tri-plane features-based NeRF as a unified representation of the 3D scene to constrain global consistency. After that, we propose a generative refinement network to synthesize new contents with higher quality by exploiting the natural image prior of the 2D diffusion model as well as the global 3D representation information of the current scene. Extensive experiments demonstrate that compared to previous methods, our approach supports more varieties of scene generation and arbitrary camera trajectories with improved visual quality and 3D consistency. Codes will be released.

BibTex Code Here